We’re once again in the midst of a smart glasses arms race, but the new ammunition is AI and a proliferation of watchwords. “Hey Meta” is the latest, and I’m not sure I can take it.

I like the look of the new Ray-Ban Meta Smart Glasses that Meta CEO Mark Zuckerberg unveiled on Wednesday during Meta Connect. Thanks to the Ray-Ban partnership, they’re already the best-looking smart glasses on the market, and the new color options, including one that looks like tortoiseshell, are spiffy.

A better camera, more storage, and improved audio were all expected, as was the upgrade to Qualcomm’s latest wearables AR/VR chip, the XR2 Gen 2.

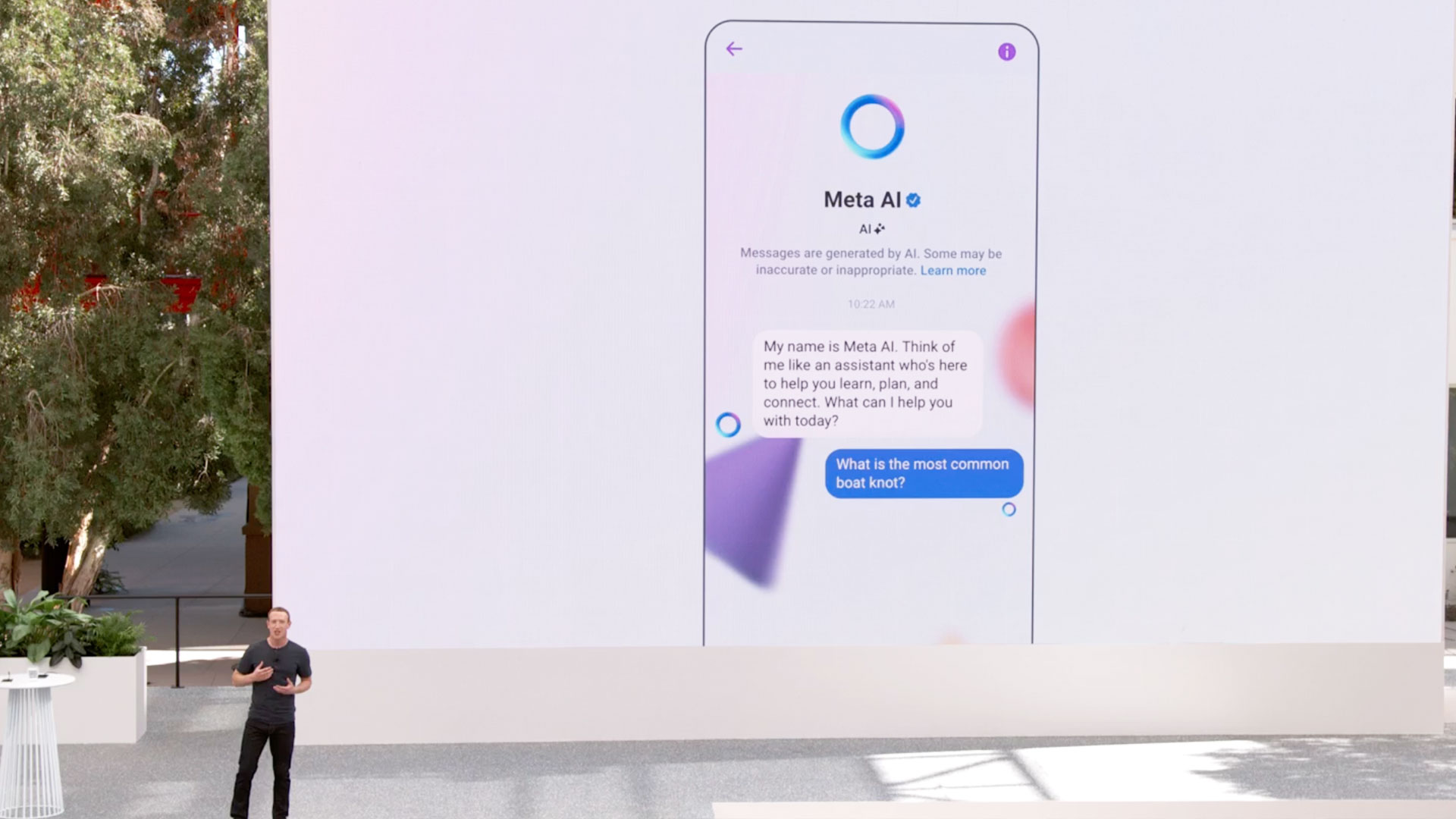

What I didn’t expect was the unveiling of Meta AI, a generative AI chatbot (based on Meta’s large language model Llama 2) that’s already integrated into the new smart glasses.

So now, along with all the voice commands you can use to ask the Ray-Ban Meta Smart Glasses to take a picture or share a video, you can write a better social prompt and ask Meta AI questions about, I guess, whatever.

In Zuckerberg’s demo, they asked Meta AI how long to grill chicken breast (5 to 7 minutes per side), the longest river in New York (the Hudson), and about some arcane pickleball rule that I won’t get into here.

While the demo showed text bubbles, that’s not how Meta’s smart glasses and Meta AI will work. There is no built-in screen (thank God). Instead, this will be an aural experience.

You’ll say, “Hey Goo…” er, “Hey Meta,” and then ask your question. Meta AI will answer with its voice. Zuckerberg didn’t offer details on how long you’d wait between the watchword and Meta AI waking up, or how long it might take to get answers, many of which are sourced from the web thanks to the partnership with Microsoft’s Bing Chat.

Next year, a free update will make the Ray-Ban Meta Smart Glasses multimodal. Remember that new camera? Well, it’ll be used for more than just posting photos and videos. The update will let the camera “see” what you’re seeing, and if you engage Meta AI, it can tell you all about it.

After the demos were over and Zuckerberg left the stage, I tried to imagine what this all might look like: you wearing your smart Ray-Bans and talking to them. Turns out I didn’t have to imagine it.

In an interview with The Verge, Zuckerberg described how businesses and creators might use Meta’s new AI platform. Then he added:

“So there’s more of the utility, which is what Meta AI is, like answer any question. You’ll be able to use it to help navigate your Quest 3 and the new Ray-Ban glasses that we’re shipping. We should get to that in a second. That’ll be pretty wild, having Meta AI that you can just talk to all day long on your glasses.”

Let me repeat that last bit: “…having Meta AI that you can just talk to all day long on your glasses.”

Instantly, my mind filled with images of hundreds of people wearing their stylish Ray-Ban Meta Smart Glasses while also muttering to themselves (actually, to the glasses):

“Hey Meta, how do I make a mocha latte?”

“Hey Meta, what’s the best way to get from Grand Central Station to Soho?”

“Hey Meta, how should I talk to my parents about my inability to repay my student loan?”

It will go on and on and on, and it’s not necessarily a future I like.

When Google Glass first launched, we had a few ways of interacting with it. We could use touch, we could use head nods, and we could also use “Hey Google.”

Of all of them, the least ridiculous was touch. A touch-sensitive panel on the side let you tap and swipe to change settings, open messages, and take pictures and videos.

The worst of them was surely the head nod. It just made you look unhinged.

Voice wasn’t terrible when you were alone, but I never liked shouting “Hey Google” on a crowded NYC street. At least I was one of the few idiots wearing Google Glass. I can’t imagine a world where everyone is talking to their eyeglass-based AIs at the same time.

This, though, is the magical world Mark Zuckerberg envisions. It may be the only option for Ray-Ban Meta Smart Glasses. While the original Ray-Ban Stories has a basic touch panel for volume and playback, I don’t know if, in the new smart glasses, it has been extended to Meta AI interaction, and I don’t think there’s any kind of motion sensitivity. “Hey Meta” will, I think, be the primary way you interact with the built-in Meta AI.

In recent weeks, Apple and Amazon have each introduced ways to remove the “Hey” part from their AI watchwords, and Amazon’s experimental Alexa will soon carry on conversations with no watchword at all.

Is this better than what Meta is proposing? Slightly. At least people using Amazon’s Echo Frames might eventually look like they’re on a call with a trusted friend.

Meta AI will, in the early going at least, require a watchword for each query. If the glasses become bestsellers, I see a nightmare in the making.

The good news is that it’s all software, and it’s clear that Meta will be delivering regular updates. By the time Meta AI goes multimodal in 2024, Meta may be deprecating “Hey Meta” or at least cutting it down to one unlock utterance followed by normal conversation.

Maybe. If not, Zuckerberg’s vision will come true in the worst way possible.
