Over the last decade, we’ve watched organizations of all sizes adopt containers to power the great “lift and shift” to the cloud. Looking ahead to the next decade, we see a new pattern emerging, one dominated by distributed applications. Organizations are struggling to meet their goals for performance, regulatory compliance, autonomy, privacy, cost, and security, all while trying to move intelligence from data centers to the edge.

How, then, can engineers optimize their development methodologies to smooth the journey to the edge? The answer may lie with the WebAssembly (Wasm) Component Model. As developers begin creating Wasm component libraries, they may come to think of them as the world’s largest crate of Legos. In the past, we brought our data to our compute. Now we’re entering an age where we take our compute to our data.

What do we mean by ‘the edge’?

There are many different definitions of edge computing. Some see it as the “near edge,” i.e., a data center located near a user. Sometimes it means the CDN edge. Some consider devices on the “far edge,” i.e., IoT devices or sensors in resource-constrained environments. When we think of the edge, and how it relates to WebAssembly (Wasm), we mean the device the user is currently interacting with. That could be their smartphone, their car, a train or a plane, or even the humble web browser. The “edge” means the ability to put on-demand computational intelligence in the right place, at the right time.

Figure 1. Applications in Cosmonic run on any edge, from public clouds to users on the far edge. (Source: Cosmonic)

According to NTT’s 2023 Edge Report, nearly 70% of enterprises are fast-tracking edge adoption to gain a competitive edge (pun intended) or tackle critical business issues. It’s easy to see why. The drive to put real-time, rewarding experiences on personal devices is matched by the need to put compute power directly into manufacturing processes and industrial appliances.

Fast-tracking edge adoption makes business sense. In delivering mobile-first architectures and personalized user experiences, we reduce latency and improve performance, accuracy, and productivity. Most importantly, we deliver world-class user experiences at the right time and in the right place. Fast-emerging server-side standards, like WASI and the WebAssembly Component Model, allow us to deliver these outcomes in edge solutions more quickly, with more features, and at a lower cost.

A path to abstraction

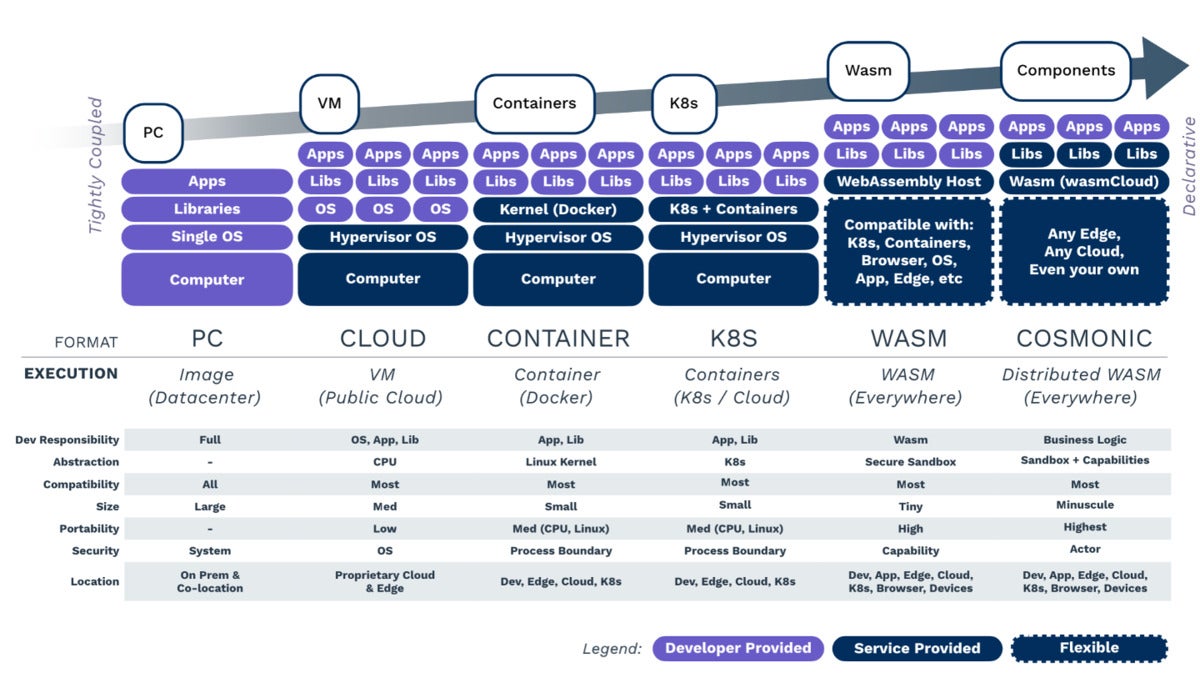

Over the last 20 years, we have made huge progress in abstracting common complexities out of the developer experience. Moving these layers to standardized platforms means that, with each wave of innovation, we have simplified the effort, reduced time-to-market, and increased the pace of innovation.

Figure 2. Epochs of technology. (Source: Cosmonic)

VMs decoupled operating systems from specific machines, ushering in the era of the public cloud. We stopped relying on expensive data centers and the complicated processes that came with them. As the need grew to simplify the deployment and management of operating environments, containers emerged, bringing the next level of abstraction. As monolithic architectures became microservices, Kubernetes paved the way to run applications in smaller, more constrained environments.

Kubernetes is great at orchestrating large clusters of containers, at scale, across distributed systems. Its smaller sibling orchestrators (K3s, KubeEdge, MicroK8s, MicroShift) take this a step further, allowing containers to run in smaller footprints. There are some well-known use cases, such as those of the USAF and the International Space Station. Can you run Kubernetes on a single device? Absolutely. Can you push it down to fairly small devices? Yes. But, as companies are discovering, there’s a point where the cost of running Kubernetes exceeds the value extracted.

Kubernetes challenges at the edge

In the military, we might describe the constraints of mobile architecture using the language of size, weight, and power, or SWaP for short. At a certain scale, Kubernetes works well at the edge. But what if we want to run applications on Raspberry Pis or on personal devices?

It’s possible to optimize Docker containers, but even the smallest, most optimized container would sit somewhere around 100 MB to 200 MB. Standard Java-based containerized applications often run to gigabytes of memory per instance. This might work for airplanes but, even here, Kubernetes is resource-hungry. By the time you bring everything needed to operate Kubernetes onto your jet, 30% to 35% of that system’s resources are occupied just in running the platform, never mind doing the job for which it’s intended.
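To put the 30% to 35% figure in concrete terms, the sketch below computes how much of a device’s memory remains for the actual workload once platform overhead is subtracted. The 16 GB device and the `usable_capacity` helper are hypothetical; only the overhead range comes from the paragraph above.

```python
def usable_capacity(total: float, overhead_fraction: float) -> float:
    """Capacity left for the actual workload after platform overhead."""
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")
    return total * (1.0 - overhead_fraction)


# Hypothetical on-board computer with 16 GB of memory (not from the article).
DEVICE_MEMORY_GB = 16.0

for overhead in (0.30, 0.35):  # platform overhead range cited in the text
    left = usable_capacity(DEVICE_MEMORY_GB, overhead)
    print(f"{overhead:.0%} overhead leaves {left:.1f} GB for the mission workload")
```

On this assumed device, between 4.8 GB and 5.6 GB goes to the platform before any mission software runs.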

Devices that return to a central data center, office, or maintenance facility may have the bandwidth and downtime to perform updates. However, truly “forward-deployed” mobile devices may be only intermittently connected over low-bandwidth links, or difficult to service. Capital assets like ships, buildings, and power infrastructure may be designed with a service life of 25 to 50 years, and it’s hard to imagine shipping complex containers full of statically compiled dependencies to such assets for decades.

What this means is that, on the far edge, on sensors and devices, Kubernetes is simply too large for the resource constraints, even at its lowest common denominator. When bringing devices onto a ship, an airplane, a car, or a watch, the size, weight, and power constraints are too tight for Kubernetes to overcome. So companies are looking for more lightweight and agile ways to deliver capabilities to these places effectively.

Even highly optimized and minimized containers can have cold start times measured in seconds. Real-time applications (messaging apps, streaming services, and multiplayer games, for instance), i.e., those that must respond to a round-trip request in less than 200 milliseconds to feel interactive, require containerized applications to be running idle before requests arrive at the data center. This cost is compounded across each application, multiplied by the number of Kubernetes regions you operate. In each region you’ll have your applications pre-deployed and scaled to meet anticipated demand. This “lower bound problem of Kubernetes” means that even highly optimized Kubernetes deployments often operate with extensive idle capacity.
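The reasoning in that paragraph is a two-step chain: if a cold start plus the real work does not fit inside the interactivity budget, instances must be kept warm, and the warm floor then multiplies across applications and regions. A minimal sketch of both steps follows. The 200 ms budget comes from the text; the 2-second cold start, 20 ms handler time, and fleet sizes are hypothetical, and network latency is ignored for simplicity.

```python
from dataclasses import dataclass

INTERACTIVE_BUDGET_MS = 200.0  # round-trip budget cited in the text


def can_start_on_demand(cold_start_ms: float, handler_ms: float,
                        budget_ms: float = INTERACTIVE_BUDGET_MS) -> bool:
    """True if starting a fresh instance per request still meets the budget.

    Network latency is ignored to keep the sketch simple.
    """
    return cold_start_ms + handler_ms <= budget_ms


@dataclass
class Fleet:
    apps: int                # distinct applications
    regions: int             # Kubernetes regions each app runs in
    min_replicas: int        # warm replicas kept per app per region
    mem_per_replica_mb: int  # memory each idle replica holds


def idle_floor_mb(fleet: Fleet) -> int:
    """Memory reserved before a single request arrives: the 'lower bound'."""
    return fleet.apps * fleet.regions * fleet.min_replicas * fleet.mem_per_replica_mb


# Hypothetical numbers: a 2 s container cold start and 20 ms of real work.
if not can_start_on_demand(cold_start_ms=2000.0, handler_ms=20.0):
    # Too slow to start per request, so replicas must be kept warm everywhere.
    fleet = Fleet(apps=50, regions=6, min_replicas=2, mem_per_replica_mb=512)
    print(f"Idle floor: {idle_floor_mb(fleet) / 1024:.0f} GB")
```

With these assumed inputs the script prints `Idle floor: 300 GB`, memory held purely so that no request ever waits for a cold start. Because the floor is a product, adding a region or an application scales it linearly.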

The high cost of container management

Containers bring many benefits to technology infrastructure, but they don’t solve the intense overhead of managing the applications inside those containers. The most expensive part of running Kubernetes is the cost of maintaining and updating “boilerplate” or non-functional code: software that’s statically compiled and included in each application/container at build time.

The layers of a container are combined with application binaries that typically include imported code, replete with vulnerabilities, frozen at compile time. Developers, hired to deliver new application features, find themselves in the role of “boilerplate farming.” What this means is that, to stay secure and compliant with security and regulatory guidelines, they must constantly patch the non-functional code that makes up 95% of the average application or microservice.

The problem is especially acute at scale. Adobe recently discussed the high cost of running Kubernetes clusters at the 2023 Cloud Native WebAssembly Day in Amsterdam. Senior Adobe engineers Colin Murphy and Sean Isom shared, “A lot of people are running Kubernetes, but when you run this kind of multi-tenant setup, while it’s operationally excellent, it can be very expensive to run.”

As is often the case, when organizations find common cause, they collaborate on open source software. In Adobe’s case, they’ve joined WebAssembly platform-as-a-service (PaaS) provider Cosmonic, BMW, and others to adopt CNCF wasmCloud. With wasmCloud, they’ve collaborated to build an application runtime around WebAssembly so they can simplify the application life cycle. At Adobe, they’ve leveraged wasmCloud to bring Wasm alongside their multi-tenant Kubernetes estate to deliver features faster and at lower cost.

Wasm advantages at the edge

As the next major technical abstraction, Wasm aspires to address the common complexity inherent in managing the day-to-day dependencies embedded in every application. It addresses the cost of operating applications that are distributed horizontally, across clouds and edges, to meet stringent performance and reliability requirements.

Wasm’s tiny size and secure sandbox mean it can be safely executed everywhere. With a cold start time in the range of 5 to 50 microseconds, Wasm effectively solves the cold start problem. It is compatible with Kubernetes without being dependent on it. Its diminutive size means it can be scaled to a significantly higher density than containers and, in many cases, it can even be executed performantly on demand with each invocation. But just how much smaller is a Wasm module compared to a micro-K8s containerized application?

An optimized Wasm module is typically around 20 KB to 30 KB in size. Compared to a Kubernetes container (usually a few hundred MB), the Wasm compute units we want to distribute are several orders of magnitude smaller. Their smaller size decreases load time, increases portability, and means that we can operate them even closer to users.
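“Several orders of magnitude” can be checked directly from the figures in this article. The sketch below conservatively uses the 100 MB to 200 MB range given earlier for a minimal container, together with the 20 KB to 30 KB range for a Wasm module, and expresses the ratio as a power of ten. Decimal units (1 MB = 1,000 KB) are an assumption; binary units shift the result only slightly.

```python
import math

KB = 1
MB = 1000 * KB  # decimal units assumed

container_sizes = (100 * MB, 200 * MB)  # minimal container range from the text
wasm_sizes = (20 * KB, 30 * KB)         # optimized Wasm module range from the text

# Narrowest gap: smallest container vs. largest module; widest gap: the reverse.
low = min(container_sizes) / max(wasm_sizes)
high = max(container_sizes) / min(wasm_sizes)

print(f"ratio: {low:,.0f}x to {high:,.0f}x")
print(f"orders of magnitude: {math.log10(low):.1f} to {math.log10(high):.1f}")
```

This prints `ratio: 3,333x to 10,000x` and `orders of magnitude: 3.5 to 4.0`. A container of a few hundred MB widens the gap further.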

The WebAssembly Component Model

Applications that operate across edges often run into challenges posed by the sheer diversity of devices encountered there. Consider streaming video to edge devices. There are millions of unique combinations of operating system, hardware, and version on which your application must scale performantly. Teams today solve this problem by building different versions of their applications for each deployment target: one for Windows, one for Linux, one for Mac, and that’s just for x86. Common components are sometimes portable across boundaries, but they’re plagued by subtle differences and vulnerabilities. It’s hard.

The WebAssembly Component Model allows us to split our applications into contract-driven, hot-swappable components that platforms can fulfill at runtime. Instead of statically compiling code into each binary at build time, developers, architects, or platform engineers can choose any component that matches that contract. This enables the simple migration of an application across many clouds, edges, services, or deployment environments without changing any code.
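In the Component Model itself, contracts are written in WIT and components are linked at the Wasm level. The sketch below is only a language-level analogy of that idea in Python, not the Component Model API. A `KeyValue` protocol stands in for the contract, and the hypothetical `InMemoryStore` and `LoggingStore` components both satisfy it, so the application function never changes when one is swapped for the other.

```python
from typing import Optional, Protocol


class KeyValue(Protocol):
    """The contract: any component offering these methods can be linked in."""

    def get(self, key: str) -> Optional[str]: ...
    def set(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """Hypothetical component suited to a small edge device."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


class LoggingStore(InMemoryStore):
    """Hypothetical alternative component that also records every write."""

    def __init__(self) -> None:
        super().__init__()
        self.writes: list[str] = []

    def set(self, key: str, value: str) -> None:
        self.writes.append(key)
        super().set(key, value)


def count_visit(store: KeyValue, user: str) -> int:
    """Application logic: depends only on the contract, not on a concrete store."""
    visits = int(store.get(user) or "0") + 1
    store.set(user, str(visits))
    return visits


# The "platform" chooses which component fulfills the contract at runtime.
for component in (InMemoryStore(), LoggingStore()):
    count_visit(component, "alice")
    print(type(component).__name__, count_visit(component, "alice"))
```

Both iterations print a visit count of 2: the application logic is identical, and only the component behind the contract differs.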

What this means is that platforms like wasmCloud can load the latest, up-to-date versions of a component at runtime, saving the developer from costly application-by-application maintenance. Furthermore, different components can be linked at different times and locations depending on the context, current operating conditions, privacy, security, or any other combination of factors, all without modifying your original application. Developers are freed from boilerplate farming and can focus on features. Enterprises can compound these savings across hundreds, even thousands, of applications.
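The kind of context-dependent decision described above can be sketched as a small selection policy. Everything here is hypothetical: the `Context` fields and the component names are illustrative, and on a real platform such as wasmCloud the linking itself is handled by the platform. This sketch only shows the decision logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Hypothetical runtime facts a platform might consider when linking."""
    location: str          # e.g. "cloud", "vehicle", "phone"
    connected: bool        # is there a usable uplink right now?
    sensitive_data: bool   # does the workload handle private data?


def choose_storage_component(ctx: Context) -> str:
    """Pick which (hypothetical) component should fulfill the storage contract."""
    if ctx.sensitive_data:
        return "encrypted-local-store"   # keep private data on the device
    if not ctx.connected:
        return "local-store"             # no uplink: fall back to local storage
    if ctx.location == "cloud":
        return "managed-cloud-store"
    return "edge-cache-store"


# The application code is unchanged; only the linked component differs.
for ctx in (
    Context("cloud", connected=True, sensitive_data=False),
    Context("vehicle", connected=False, sensitive_data=False),
    Context("phone", connected=True, sensitive_data=True),
):
    print(ctx.location, "->", choose_storage_component(ctx))
```

The order of the checks encodes a priority: privacy wins over connectivity, and connectivity wins over location.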

The WebAssembly Component Model supercharges edge efforts as applications become smaller, more portable, and more secure by default. For the first time, developers can pick and choose pieces of their application, implemented in different languages, as different value propositions, to suit more varied use cases.

You can read more about the WebAssembly Component Model in InfoWorld here.

Wasm at the consumer edge

These fast-emerging standards are already revolutionizing the way we build, deploy, operate, and maintain applications. On the consumer front, Amazon Prime Video has been working with WebAssembly for a year to improve the update process for more than 8,000 unique device types, including TVs, PVRs, consoles, and streaming sticks. Done manually, this process would require separate native releases for each device, which would adversely affect performance. Having joined the Bytecode Alliance (the representative body for the Wasm community), the company has replaced JavaScript with Wasm in selected parts of the Prime Video app.

With Wasm, the team was able to reduce average frame times from 28 to 18 milliseconds, and worst-case frame times from 45 to 25 milliseconds. Memory usage also improved. In his recent article, Alexandru Ene reports that the investment in Rust and WebAssembly has paid off: “After a year and 37,000 lines of Rust code, we have significantly improved performance, stability, and CPU consumption and reduced memory usage.”
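Expressed as relative improvements, the Prime Video frame-time figures work out as follows. The sketch simply computes percentage reductions from the numbers quoted above. The `reduction_pct` helper is illustrative only.

```python
def reduction_pct(before_ms: float, after_ms: float) -> float:
    """Percentage reduction going from before_ms to after_ms."""
    return (before_ms - after_ms) / before_ms * 100.0


# Frame times reported for Prime Video, in milliseconds: (before, after).
frame_times_ms = {
    "average": (28.0, 18.0),
    "worst case": (45.0, 25.0),
}
for label, (before, after) in frame_times_ms.items():
    pct = reduction_pct(before, after)
    print(f"{label}: {before:.0f} ms -> {after:.0f} ms ({pct:.1f}% lower)")
```

That is roughly a 36% cut in average frame time and a 44% cut in worst-case frame time. The larger gain at the tail is what makes the app feel smoother under load.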

Wasm at the development edge

One thing is for sure: WebAssembly is already being rapidly adopted from core public clouds all the way to end-user edges, and everywhere in between. The early adopters of WebAssembly, including Amazon, Disney, BMW, Shopify, and Adobe, are already demonstrating the remarkable power and versatility of this new stack.
