A team of researchers at the University of Chicago has created a tool aimed at helping online artists "fight back against AI companies" by inserting, in essence, poison pills into their original work.

Called Nightshade, after the family of toxic plants, the software is said to introduce poisoned pixels into digital art that mess with the way generative AIs interpret it. Models like Stable Diffusion are trained by scouring the internet and picking up as many images as they can to use as training data. Nightshade exploits this "security vulnerability". As explained by the MIT Technology Review, these "poisoned data samples can manipulate models into learning" the wrong thing. For example, a model may see a picture of a dog as a cat, or a car as a cow.

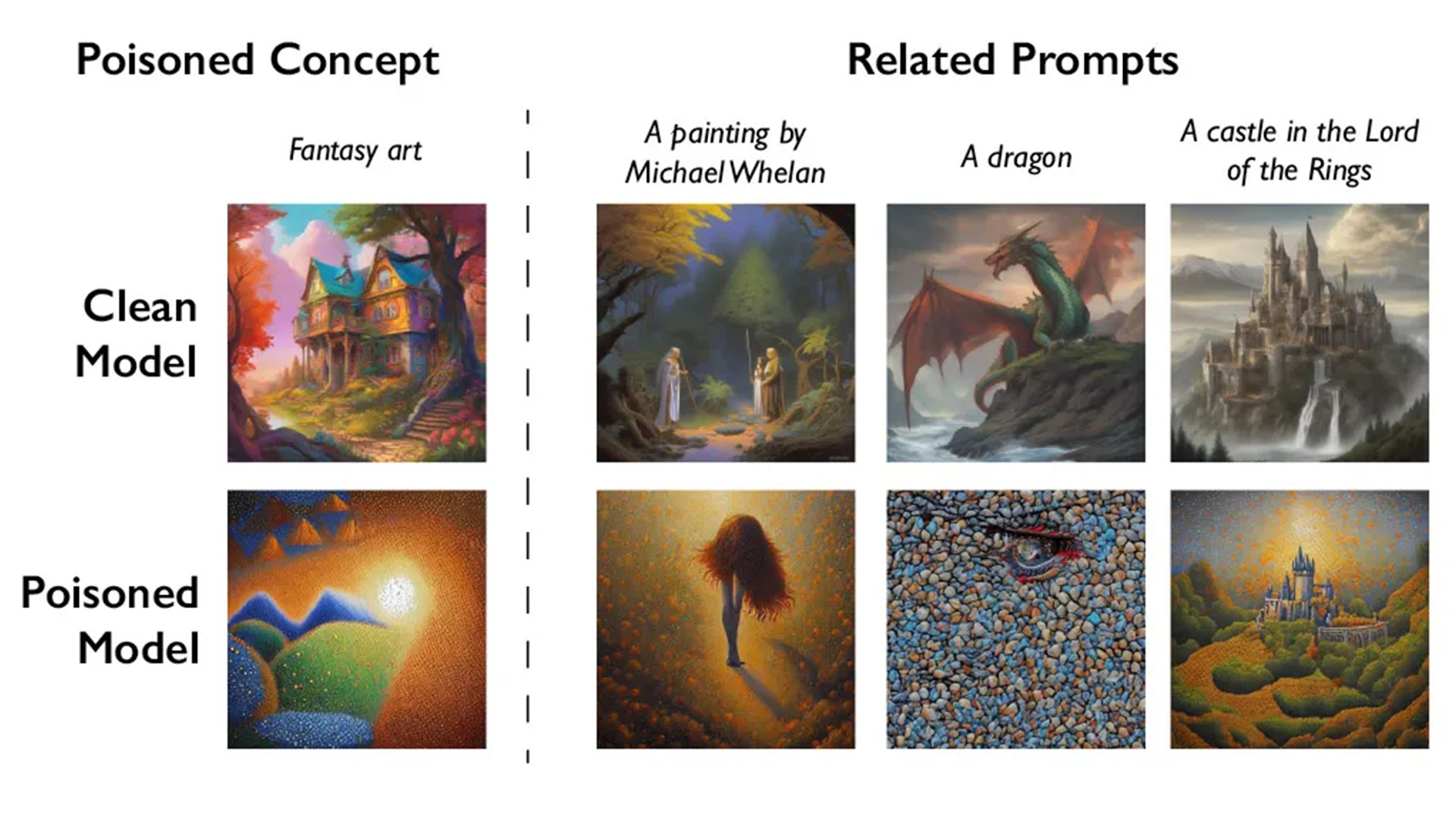

Poison techniques

As part of the testing phase, the team fed Stable Diffusion poisoned content and "then prompted it to create images of dogs". After being given 50 samples, the AI generated pictures of misshapen dogs with six legs. After 100, you begin to see something resembling a cat. Once it was given 300, the dogs became full-fledged cats. Below, you'll see the other trials.

The report goes on to say that Nightshade also affects "tangentially related" ideas, because generative AIs are good "at making connections between words". Messing with the word "dog" jumbles similar concepts like puppy, husky, or wolf. This extends to art styles as well.
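To make the dose-response in the dog trials a little more concrete, here is a deliberately simplified toy in Python. It is not Nightshade's method: it assumes a made-up 2-D "feature space" and a model that learns a concept as the average of every sample captioned with that word. Poisoned samples are captioned "dog" but carry cat-like features, so as their number grows the learned "dog" drifts toward "cat". The 100 clean samples, the coordinates, and the helper names (`learned_dog_concept`, `nearest_label`) are hypothetical, and the point where it flips is an artifact of these invented numbers, not the study's results.

```python
# Toy illustration of data poisoning (hypothetical numbers; NOT Nightshade's algorithm).
from typing import List, Tuple

Point = Tuple[float, float]

DOG: Point = (0.0, 0.0)    # assumed location of clean "dog" features
CAT: Point = (10.0, 10.0)  # assumed location of "cat" features


def centroid(points: List[Point]) -> Point:
    """Average of the samples: our stand-in for what the model 'learns'."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def learned_dog_concept(clean_dogs: int, poisoned: int) -> Point:
    # Poisoned samples are captioned "dog" but carry cat-like features.
    samples = [DOG] * clean_dogs + [CAT] * poisoned
    return centroid(samples)


def nearest_label(p: Point) -> str:
    """Which known concept the learned 'dog' now sits closest to (ties go to dog)."""
    d_dog = (p[0] - DOG[0]) ** 2 + (p[1] - DOG[1]) ** 2
    d_cat = (p[0] - CAT[0]) ** 2 + (p[1] - CAT[1]) ** 2
    return "dog" if d_dog <= d_cat else "cat"


if __name__ == "__main__":
    for n in (0, 50, 100, 300):
        concept = learned_dog_concept(clean_dogs=100, poisoned=n)
        print(n, concept, nearest_label(concept))
```

Real models are trained on billions of samples, so plain averaging like this would need vastly more poison; the research's claim is that carefully crafted pixel changes do the job with far fewer.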

It's possible for AI companies to remove the toxic pixels. However, as the MIT post points out, it's "very difficult to remove them". Developers would have to "find and delete each corrupted sample." To give you an idea of how tough this would be, a 1080p image has over two million pixels. If that wasn't difficult enough, these models "are trained on billions of data samples." So imagine looking through a sea of pixels to find the handful messing with the AI engine.
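For reference, the "over two million" figure follows from the standard 1080p resolution of 1920 by 1080 pixels:

$$1920 \times 1080 = 2{,}073{,}600 \text{ pixels per image}$$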

At least, that's the idea. Nightshade is still in the early stages. Currently, the tech "has been submitted for peer review at [the] computer security conference Usenix." MIT Technology Review managed to get a sneak peek.

Future endeavors

We reached out to team lead Professor Ben Y. Zhao at the University of Chicago with several questions.

He told us they do have plans to "implement and release Nightshade for public use." It'll be part of Glaze as an "optional feature". Glaze, if you're not familiar, is another tool Zhao's team created that gives artists the ability to "mask their own personal style" and stop it from being adopted by artificial intelligence. He also hopes to make Nightshade open source, allowing others to make their own venom.

Additionally, we asked Professor Zhao if there are plans to create a Nightshade for video and literature. Right now, several literary authors are suing OpenAI, claiming the program is "using their copyrighted works without permission." He says creating toxic software for other kinds of work would be a big endeavor "since those domains are quite different from static images." The team has "no plans to tackle those, yet." Hopefully someday soon.

So far, initial reactions to Nightshade are positive. Junfeng Yang, a computer science professor at Columbia University, told Technology Review this could make AI developers "respect artists' rights more", and maybe even make them willing to pay out royalties.

If you're interested in picking up illustration as a hobby, be sure to check out TechRadar's list of the best digital art and drawing software in 2023.
