Can AI programs like OpenAI’s GPT-4, Anthropic’s Claude 2, or Meta’s Llama 2 help determine the origins and nuances of the idea of ‘consciousness’?

Advances in computational models and artificial intelligence open new vistas in understanding complex systems, including the tantalizing puzzle of consciousness. I recently wondered,

“Could a system as simple as cellular automata, such as Conway’s Game of Life (GoL), exhibit traits akin to ‘consciousness’ if evolved under the right conditions?”

Even more intriguingly, could advanced AI models like Large Language Models (LLMs) help identify or even facilitate such emergent properties? This op-ed explores these questions by proposing a novel experiment that seeks to combine cellular automata, evolutionary algorithms, and cutting-edge AI models.

The idea that complex systems can emerge from simple rules is a tantalizing prospect for researchers in fields ranging from biology to artificial intelligence. In particular, the question of whether “consciousness” can evolve from simple cellular automata, like Conway’s Game of Life, poses ethical and philosophical dilemmas.

Consciousness evolved

Consciousness is a subject that has fascinated philosophers, neuroscientists, and theologians alike. However, the concept is yet to be fully understood. On the one hand, we have traditional views that align consciousness with the notion of a “soul,” sometimes positing divine creation as the source. On the other hand, we have emerging views that see consciousness as a product of complex computation within our biological “hardware.”

The advent of advanced AI models like Large Language Models (LLMs) and Large Multimodal Models (LMMs) has transformed our ability to analyze and understand complex data sets. These models can recognize patterns, make predictions, and provide remarkably accurate insights. Their application is no longer limited to natural language processing but extends to various domains, including simulations and complex systems. Here, we explore the potential of integrating these AI models with cellular automata to identify and understand forms of artificial ‘consciousness.’

Conway’s Game of Life

Inspired by the pioneering work in cellular automata, notably Conway’s Game of Life (GoL), I propose an experiment that adds layers of complexity to these cells, imbuing them with rudimentary genetic codes, neural networks, and even Large Multimodal Models (LMMs) like GPT-4V. The goal is to see whether any form of basic “consciousness” evolves within this dynamic and interactive environment.

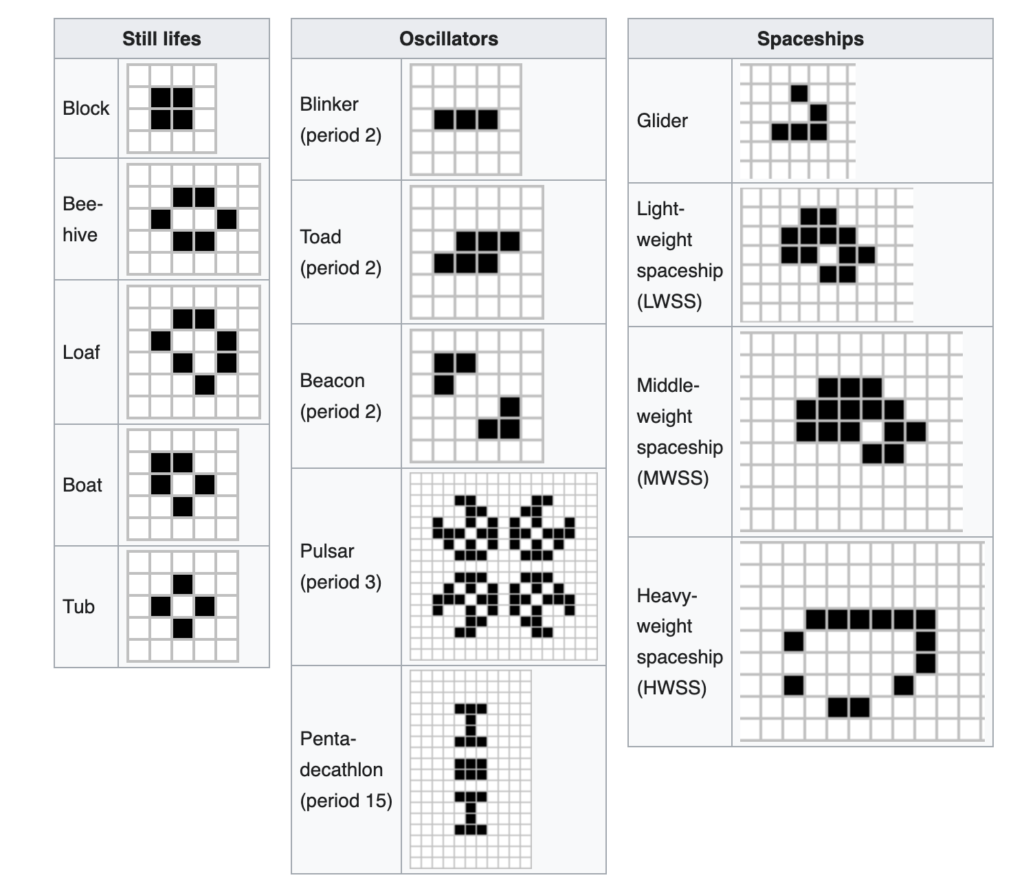

Conway’s GoL is a cellular automaton invented by mathematician John Conway in 1970. It is a grid of cells, each of which is either alive or dead. The grid evolves over discrete time steps according to simple rules: a live cell with 2 or 3 live neighbors stays alive; otherwise, it dies. A dead cell with exactly 3 live neighbors becomes alive.
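The update rule fits in a few lines of code. The following is a minimal Python sketch, assuming an unbounded grid stored as a set of live `(row, col)` coordinates; the function name `step` is illustrative and not taken from any particular library.

```python
from collections import Counter


def step(live):
    """Advance one generation. `live` is a set of (row, col) live-cell coordinates."""
    # For every cell adjacent to at least one live cell, count its live neighbors.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth: any cell with exactly 3 live neighbors.
    # Survival: a live cell with 2 (or 3) live neighbors.
    # Every other cell is dead in the next generation.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "blinker": three cells in a row flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(step(blinker)))  # [(0, 1), (1, 1), (2, 1)]
```

Storing only live cells keeps each generation's cost proportional to the population rather than the grid size. Applied four times to the glider `{(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}`, `step` returns the same shape shifted one row down and one column right: a moving pattern that nothing in the rules explicitly describes.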

Below are some examples of patterns that have been discovered within GoL.

Despite its simplicity, the game demonstrates how complex patterns and behaviors can emerge from basic rules, making it a popular model for studying complex systems and artificial life.

The Experiment: A New Frontier

GoL’s capacity to produce complex behavior from simple rules makes it an ideal foundation for the experiment, offering a platform to investigate how basic consciousness might evolve under the right conditions.

The experiment would create cells with richer attributes, such as neural networks and genetic codes. These cells would inhabit a dynamic grid environment with features such as hazards and food. An evolutionary algorithm involving mutation, recombination, and selection based on a fitness function would allow cells to adapt. Reinforcement learning could enable goal-oriented behavior.
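The evolutionary loop (mutation, recombination, and fitness-based selection) can be sketched as a small genetic algorithm. This is a hypothetical illustration: the 8-gene genome, the placeholder `fitness` function that rewards “food-seeking” genes and penalizes “hazard-seeking” ones, and the use of tournament selection with elitism are all assumptions standing in for a real cell genome and grid environment.

```python
import random

GENOME_LEN = 8  # hypothetical: e.g. the weights of a tiny per-cell neural network


def fitness(genome):
    # Placeholder fitness (assumption): the first half of the genes stands for
    # attraction to food, the second half for attraction to hazards.
    half = len(genome) // 2
    return sum(genome[:half]) - sum(genome[half:])


def mutate(genome, rng, rate=0.1, scale=0.2):
    # Gaussian mutation applied gene-by-gene, clamped to [-1, 1].
    return [
        max(-1.0, min(1.0, g + rng.gauss(0, scale))) if rng.random() < rate else g
        for g in genome
    ]


def crossover(a, b, rng):
    # Single-point recombination of two parent genomes.
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def tournament(pop, scores, rng, k=3):
    # Selection pressure: the fittest of k randomly chosen individuals wins.
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: scores[i])]


def evolve(pop_size=50, generations=40, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(GENOME_LEN)] for _ in range(pop_size)]
    history = []  # best fitness per generation
    for _ in range(generations):
        scores = [fitness(g) for g in pop]
        best = max(range(pop_size), key=lambda i: scores[i])
        history.append(scores[best])
        next_pop = [pop[best]]  # elitism: keep the best genome unchanged
        while len(next_pop) < pop_size:
            parent_a = tournament(pop, scores, rng)
            parent_b = tournament(pop, scores, rng)
            next_pop.append(mutate(crossover(parent_a, parent_b, rng), rng))
        pop = next_pop
    return pop, history
```

Elitism guarantees that the best fitness never decreases from one generation to the next; whether anything resembling adaptive behavior appears depends entirely on how the real fitness function and environment are defined.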

The experiment would be staged, beginning with establishing cell complexity and environment rules. Evolution would then be introduced through mechanisms such as genetic variation and selection pressures. Later stages would incorporate learning algorithms that enable adaptive behavior. Extensive metrics, data logging, and visualizations would track the simulation’s progress.
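The staged design and per-generation logging could be organized along the following lines. The `Stage` fields, stage names, generation counts, metric columns, and the `dummy_generation` stand-in for the actual simulation are all hypothetical; the idea is that each stage switches mechanisms on while writing one comparable row of metrics per generation, so later analysis and visualization can locate where behavior changes.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Stage:
    # Hypothetical stage description; names and flags are illustrative only.
    name: str
    generations: int
    evolution: bool  # genetic variation and selection enabled?
    learning: bool   # within-lifetime learning enabled?


STAGES = [
    Stage("baseline", 10, evolution=False, learning=False),
    Stage("evolution", 20, evolution=True, learning=False),
    Stage("evolution+learning", 30, evolution=True, learning=True),
]


def run(stages, simulate_generation, out):
    """Run the stages in order, writing one CSV row of metrics per generation to `out`."""
    writer = csv.DictWriter(
        out, fieldnames=["stage", "generation", "population", "mean_fitness"]
    )
    writer.writeheader()
    gen = 0
    for stage in stages:
        for _ in range(stage.generations):
            metrics = simulate_generation(stage)  # dict with population, mean_fitness
            writer.writerow({"stage": stage.name, "generation": gen, **metrics})
            gen += 1


def dummy_generation(stage):
    # Stand-in for the real simulation step (assumption): returns fixed metrics.
    return {"population": 100, "mean_fitness": 0.0}


buf = io.StringIO()
run(STAGES, dummy_generation, buf)
```

Keeping the generation counter global across stages makes the log a single time series, which is convenient for plotting how metrics shift at each stage boundary.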

Running the simulation over the long term might reveal emergent complexity and signs of basic consciousness, although our current understanding of consciousness makes this speculative. The value lies in systematically investigating open questions about the origins of consciousness through evolution and learning.

Rigorously testing hypotheses that incorporate the fundamental mechanisms thought to be involved could yield theoretical insights, even if full consciousness does not emerge. As a simplified yet expansive model of evolution, the simulation allows these mechanisms to be examined in isolation or in combination.

Success could inspire new directions, such as exploring different environments and selection pressures. Failure would also be informative.

Integrating GPT-4-like models

Integrating a model such as GPT-4 into the experiment could add a nuanced layer to the study of consciousness. Currently, GPT-4 is a feed-forward neural network designed to produce output based solely on the inputs it is given.

The model’s architecture may need to be fundamentally extended to allow for the possibility of evolving consciousness. Introducing recurrent neural mechanisms, feedback loops, or more dynamic architectures could make the model’s behavior more analogous to systems that display rudimentary forms of consciousness.
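The difference between a feed-forward mapping and a recurrent one can be illustrated with a minimal Elman-style recurrent unit, sketched here in plain Python. This is a generic textbook mechanism, not a description of GPT-4’s internals or of how one would actually extend it; the class name, layer sizes, and weight initialization are illustrative assumptions.

```python
import math
import random


class RecurrentCell:
    """Minimal Elman-style unit: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w_x = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.w_h = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_hidden)]
        self.b = [0.0] * n_hidden
        self.h = [0.0] * n_hidden  # hidden state, fed back into every update

    def step(self, x):
        new_h = []
        for i in range(len(self.h)):
            total = self.b[i]
            total += sum(w * xi for w, xi in zip(self.w_x[i], x))     # input term
            total += sum(w * hj for w, hj in zip(self.w_h[i], self.h))  # feedback term
            new_h.append(math.tanh(total))
        self.h = new_h
        return self.h


cell = RecurrentCell(n_in=2, n_hidden=4, seed=1)
first = cell.step([1.0, 0.0])
second = cell.step([1.0, 0.0])  # same input, but the carried-over state changes the output
```

Because the hidden state `h` is fed back into the next update, calling `step` twice with the same input yields different outputs, whereas a purely feed-forward network maps identical inputs to identical outputs.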

Furthermore, learning and adaptation are essential components in developing complex traits like consciousness. In its current form, GPT-4’s weights do not change after its initial training phase, so it cannot learn or adapt in a lasting way. Therefore, a reinforcement learning layer could be added to the model to enable adaptive behavior. Although this would introduce substantial engineering challenges, it could prove pivotal in observing evolution in simulated environments.
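In its simplest form, a reinforcement learning layer could be tabular Q-learning. The sketch below is a toy illustration under stated assumptions: a hypothetical five-cell corridor with a hazard at one end and food at the other, rewards of -1 and +1, and arbitrary hyperparameters. It is not a proposal for how RL would be attached to GPT-4; it only shows behavior adapting from reward alone.

```python
import random

N_STATES = 5          # corridor cells 0..4 (hypothetical toy environment)
HAZARD, FOOD = 0, 4   # terminal cells at each end
START = 2
LEFT, RIGHT = 0, 1


def env_step(state, action):
    """Move one cell; return (next_state, reward, done)."""
    nxt = state - 1 if action == LEFT else state + 1
    if nxt == FOOD:
        return nxt, 1.0, True
    if nxt == HAZARD:
        return nxt, -1.0, True
    return nxt, 0.0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        s = START
        for _ in range(100):  # cap episode length
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max((LEFT, RIGHT), key=lambda act: q[s][act])
            s2, r, done = env_step(s, a)
            # Q-learning update toward reward plus discounted best future value.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
            if done:
                break
    return q


q = q_learning()
policy = ["right" if q[s][RIGHT] > q[s][LEFT] else "left" for s in range(1, FOOD)]
print(policy)  # ['right', 'right', 'right']
```

The agent is never told where the food is; the preference for moving toward it from every interior cell emerges purely from the rewards it experiences.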

The role of sensory experience in consciousness is another aspect worth exploring. To facilitate this, GPT-4 could be interfaced with other models trained to process different types of sensory data, such as visual or auditory inputs. This could offer the model a rudimentary ‘perception’ of its simulated environment, adding another layer of complexity to the experiment.
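One simple way to give a language model a rudimentary ‘perception’ of the grid is to render each cell’s local surroundings as text that could be placed in a prompt. The symbol encoding, the 3x3 field of view, and the function name `describe_surroundings` are hypothetical; a multimodal model such as GPT-4V could instead be shown an image, and the actual model call is deliberately left out.

```python
def describe_surroundings(grid, row, col):
    """Render the 3x3 neighborhood around (row, col) as text for a language model.

    `grid` is a list of equal-length strings using a hypothetical encoding:
    '.' empty, 'F' food, 'H' hazard, '#' another cell. Off-grid squares appear as 'X'.
    """
    lines = []
    for dr in (-1, 0, 1):
        chars = []
        for dc in (-1, 0, 1):
            r, c = row + dr, col + dc
            if (dr, dc) == (0, 0):
                chars.append("@")  # the observing cell itself
            elif 0 <= r < len(grid) and 0 <= c < len(grid[r]):
                chars.append(grid[r][c])
            else:
                chars.append("X")  # edge of the world
        lines.append("".join(chars))
    legend = "Legend: @ = you, . = empty, F = food, H = hazard, # = other cell, X = wall"
    return "Your surroundings:\n" + "\n".join(lines) + "\n" + legend


grid = ["F..",
        ".#.",
        "..H"]
print(describe_surroundings(grid, 0, 0))
```

A text view like this is cheap to generate for every cell on every tick, which matters if many model instances are perceiving the grid at once.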

Another avenue for investigation lies in enabling complex interactions within the model. The phenomenon of consciousness is often linked to social interaction and cooperation. Allowing GPT-4 to engage in complex ways with other instances of itself or with different models could be crucial to observing emergent behaviors indicative of consciousness.

Self-awareness or self-referential capabilities could also be integrated into the model. While this would be a challenging feature to implement, a form of ‘self-awareness,’ however rudimentary, could yield fascinating results and be considered a step toward basic consciousness.

Ethical considerations become crucial because the end goal is to explore the possible emergence of consciousness. Rigorous oversight mechanisms should be established to monitor ethical dilemmas such as simulated suffering, which raise questions that extend into philosophy and morality.

Philosophical, practical, and ethical considerations

The approach does not merely pose scientific questions; it dives deep into the philosophy of mind and existence. One intriguing argument is that if complex phenomena like consciousness can emerge from simple algorithms, then simulated consciousness might not be fundamentally different from human consciousness. After all, aren’t humans also governed by biochemical algorithms and electrical impulses?

The experiment opens up a Pandora’s box of ethical considerations. Is it ethical to potentially create a form of simulated consciousness? To navigate these treacherous waters, consultation with experts in ethics, AI, and possibly law would likely be needed to establish guidelines and ethical stopgaps.

Further, introducing advanced AI models like LLMs into the experimental setup complicates the ethical landscape. Is it ethical to use AI to explore or potentially generate forms of simulated consciousness? Could an AI model become a stakeholder in the ethical considerations?

In practical terms, running an experiment of this magnitude would require significant computational power. While cloud computing resources are an option, they come with their own costs. For this experiment, I have estimated the cost of local computing on high-end GPUs and CPUs over 24 months at around $21,000 to $24,000, covering electricity, cooling, and maintenance.
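Spread evenly over the 24-month run, that estimate works out to a monthly budget of roughly:

$$
\frac{\$21{,}000}{24\ \text{months}} = \$875\ \text{per month},
\qquad
\frac{\$24{,}000}{24\ \text{months}} = \$1{,}000\ \text{per month}.
$$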

Measuring ‘consciousness’

Measuring the emergence of consciousness would also require developing rigorous quantitative metrics. These could be designed around existing theories of consciousness, such as Integrated Information Theory or Global Workspace Theory. Such metrics would provide a structured framework for evaluating the model’s behavior over time.
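Computing Integrated Information Theory’s Φ exactly is intractable for all but very small systems, so a practical starting point might be simpler information-theoretic quantities. The sketch below computes Shannon entropy and the mutual information between two halves of a recorded system, I(A;B) = H(A) + H(B) - H(A,B), as a crude proxy for how much the parts share information. This is an assumption-laden stand-in, not Φ and not a measure of consciousness; the function names and the choice of split are illustrative.

```python
import math
from collections import Counter


def entropy(samples):
    """Shannon entropy, in bits, of a list of hashable observations."""
    counts = Counter(samples)
    n = len(samples)
    return -sum((k / n) * math.log2(k / n) for k in counts.values())


def mutual_information(states, split):
    """I(A;B) = H(A) + H(B) - H(A,B) between the first `split` units and the rest.

    `states` is a list of equal-length tuples of 0/1 unit states recorded over time,
    for example flattened snapshots of the simulation grid.
    """
    a = [s[:split] for s in states]
    b = [s[split:] for s in states]
    return entropy(a) + entropy(b) - entropy(states)


# Two units that always agree share one full bit of information.
coupled = [(0, 0), (1, 1), (0, 0), (1, 1)]
print(mutual_information(coupled, split=1))  # 1.0
```

By contrast, two independent, uniformly random units such as `[(0, 0), (0, 1), (1, 0), (1, 1)]` give 0 bits. Tracking a quantity like this over the course of a run could show whether parts of the system become more coupled as evolution proceeds.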

Corroborating the findings of this experiment with biological systems could offer a multidimensional perspective. Specifically, the computational model’s behaviors and patterns could be compared with those of simple biological organisms often considered conscious at some level. This could validate the findings and potentially offer new insights into the nature of consciousness itself.

This experiment, though speculative, could offer groundbreaking insights into the evolution of complex traits like consciousness from simple systems. It pushes the boundaries of what we understand about life, consciousness, and the nature of existence itself. Whether or not we witness the emergence of basic consciousness, the journey promises to be as enlightening as any potential destination.
